Introduction

Nowadays, analytics is a strong asset of any organization. It drives business insights and adds

value to the business. As a result, organizations should deploy analytics solutions for collecting,

transforming and storing data. They are choosing powerful and reliable tools in order to deliver

actionable insights right in time.

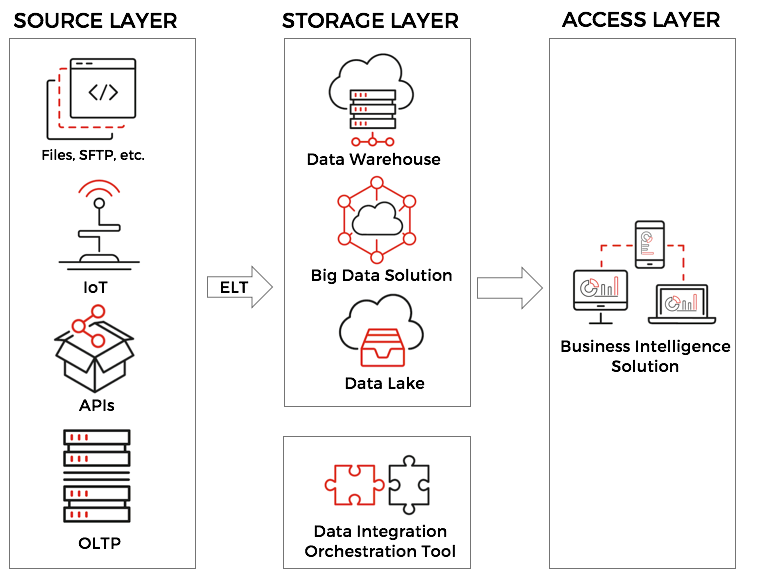

Usually, modern analytics solution consists of multiple elements and have common

architecture:

As you might see this architecture has multiple layers:

- Source Layer – this layer is representing your business through data

- Storage Layer – this layer is representing Data Warehouse and complimentary

technologies such as Hadoop or Data Lake. - Access Layer – this layer is representing your end users, mostly business users who

consume insights from the data and make business decisions.

At the bottom, we have a key element that helps wheels rolling. You might think about this as

a heart of your Analytics Solution. As a result, you should choose it very carefully. There are

lots of tools available on market and they all will tell you the same story that they are the

best. Unfortunately, this isn’t true.

We tried a number of various ETL/ELT cloud and on-premise tools and found that Matillion

ETL is a true leader and allows you to get fast value and keep strong SLA for the Data

Warehouse solution.

Problem Statement

In this article, we want to go through one of the most common problems that we spot at every

project. Usually, organizations tend to use multiple independent vendors for their BI

solution. As a result, there could be a gap, especially between Data Warehouse and

Business Intelligence tool.

Let’s understand this situation better through the example below. In one of our projects,

a customer had the cloud data warehouse and use Matillion to load it. Customer was using

Tableau as a primary BI tool.

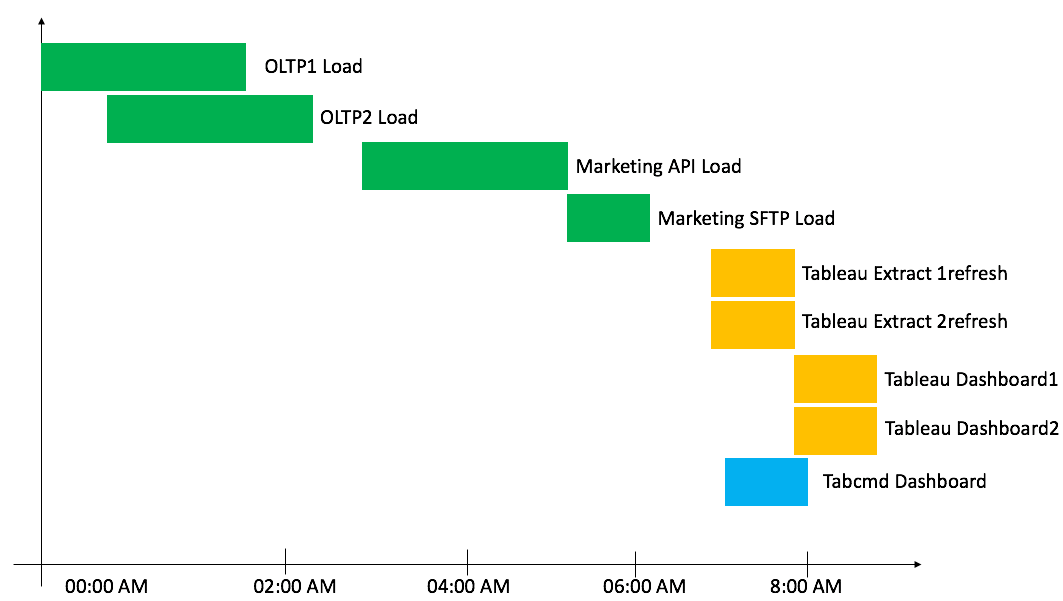

Let’s look at the ideal ELT run:

There are two individual processes here. The green one is Matillion, that is scheduled via

Matillion Scheduler. The orange one is Tableau and it is scheduled via Tableau Server.

Usually, we ASSUME that ETL is done at 6 am and we scheduled Tableau Extracts and

Dashboards a bit later. In our example, it is 7 am. In addition, we are using Tabcmd and

schedule Tableau Reports via Windows Task Scheduler.

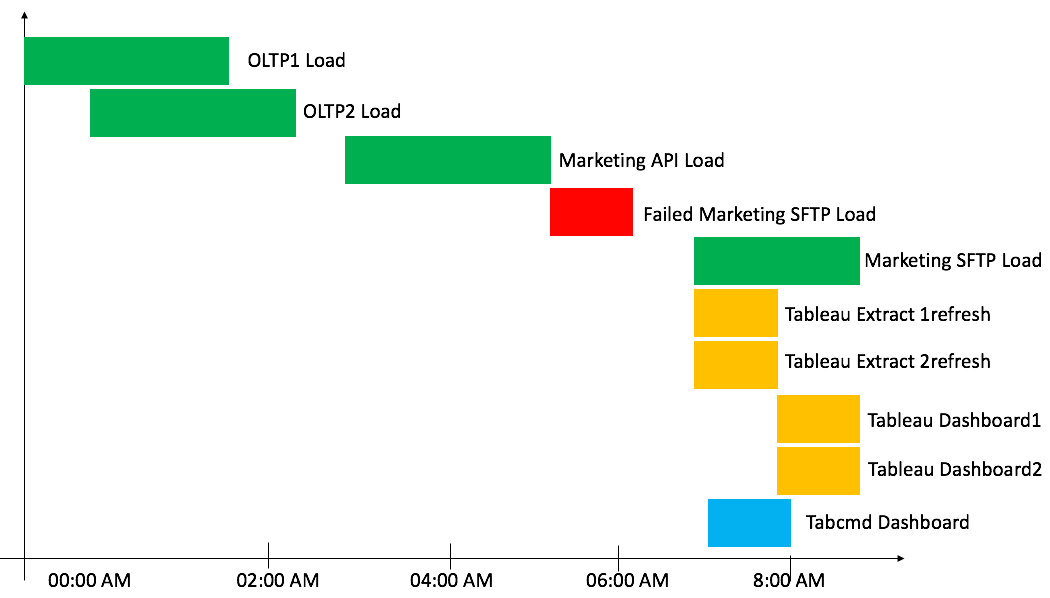

As you might guess, a marketing data source isn’t the most reliable one. Let’s consider

a scenario when SFTP was delayed files delivery. As a result, ELT job was failed and

automatically restarted later at 7 am:

In our scenario, ELT process was finished around 9 am. This should be the time of triggering

BI reports and refreshes exports.

As a result, business users got their dashboards with inconsistent data and they usually send

all ELT/DW emails into a spam folder. Based on our experience, around noon, users will be

realized that they spent half of the workday for nothing by working with inconsistent data.

The solution is obvious, but unfortunately, it is very rare.

Solution

Having extensive experience with BI, out team want to address this problem and offer best

practices to handle such this issue. This practice could be used for any BI tools. The main

goal is to connect ELT job successful finish with triggering of BI activity.

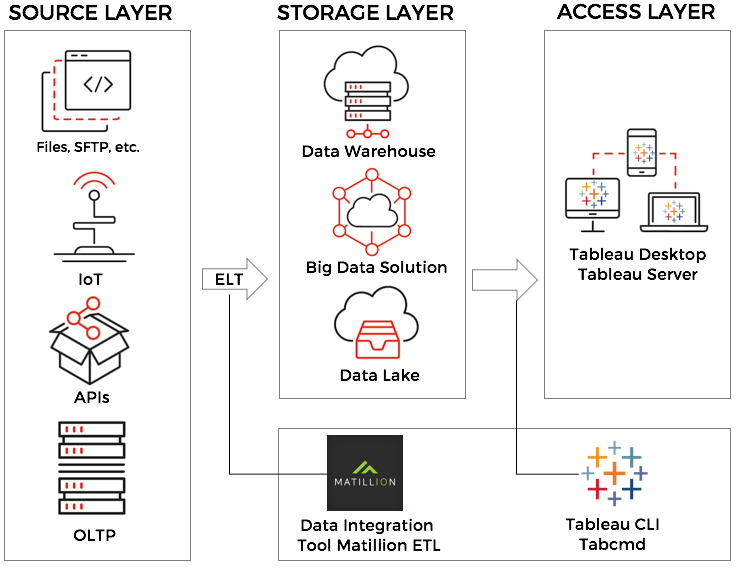

In our case, we will consider integration Matillion ETL and Tableau Server. There is an

updated architecture:

Tableau has a powerful tool TABCMD. It is command line utility which can use to automate

administration task and allows us to control Tableau Server. In 2017 Tableau was released

Tableau Server for Linux as well as Tabcmd for Linux. Previously, there was a workaround,

that allows us to use Tabcmd.jre from Windows for Linux use cases.

Moreover, Matillion released Shared Job component that allows us to create custom

components and make them nice for end users.

In this post, we will learn how to deploy Tabcmd for Matillion ETL as well as how to create

a new Matillion component using shared jobs. We are using Matillion on AWS. You can create

the same solution using Matillion on Microsoft Azure.

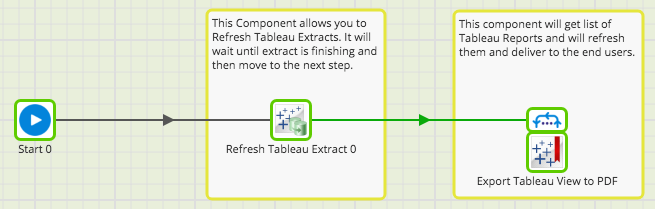

We will create custom Tableau components:

We might trigger these components using AWS SQS or insert directly into the Matillion Job.

The result of Tableau export could be written into Amazon S3 bucket or you might mount

shared folder.

How to

Let’s learn how we can do this. There are multiple steps:

1. Deploy Tabcmd for Linux on Matillion ETL

2. Create Matillion Shared Job

3. Integrated Matillion and Tableau via SQS

Deploy Tabcmd for Linux on Matillion ETL

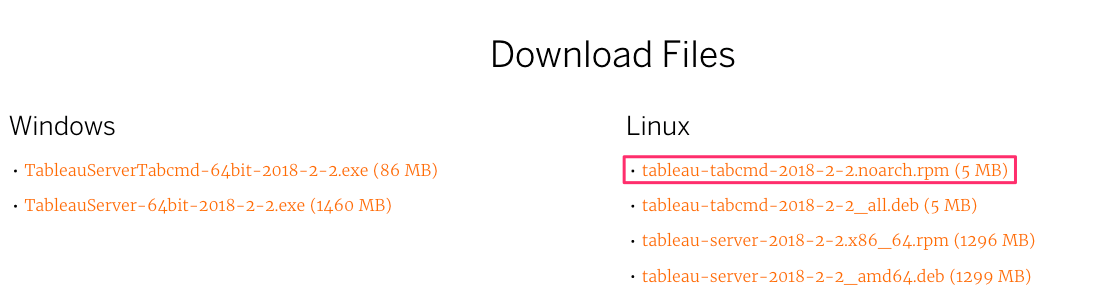

Since Tableau released Tableau on Linux, we don’t need any more spending time on converting

Windows Tabcmd for Linux. Now, we will go to the Tableau Releases website

(https://www.tableau.com/support/releases) and download Tabcmd for Linux. We should

download the same version as our Tableau Server. In our case, it is 2018.2:

We will download RMP archive because Amazon Linux has lots in common with Red Hat.

Then we should upload this into EC2 instance with Matillion. There are multiple ways to do

this. For example, the fastest way for us to use AWS CLI S3. We will upload the file into the S3

bucket and then download it from EC2 instance.

Next, we should install this archive on the EC2. Go to the location of the archive and execute this

command or specify the full path to the file:

sudo rpm -Uvh tableau-tabcmd-2018-2-2.noarch.rpm

As a result, we install Tabcmd for our Linux. Now, we want to make sure, that everything works

as expected.

Note:

It is important that Tableau and Matillion can see each other from a Network point of view. We

recommend deploying your Data Analytics solution using the same AWS account and the same

region. In case, if you have to use other topology, you might need to configure access.

In order to test we can do the following commands – login to Tableau Server and trigger

extract. Also, you might execute any other Tabcmd command. You can find a list of commands

at Tableau Official Documentation

#matillion is running under tomcat user and we will switch to this user

sudo -su tomcat

#go to tabcmd location

cd /opt/tableau/tabcmd/bin

#login tableau server

./tabcmd login -u Admin -p 'p@ssword' -s https://myserver:443 --no-certcheck --

accepteula

===== Creating new session

===== Server: https://myserver:443

===== Username: Admin

===== Connecting to the server...

===== Signing in...

===== Succeeded

#refresh extract

./tabcmd refreshextracts --datasource 'My Sexy Data Source' --project 'My project' --

no-certcheck –synchronous

===== Continuing previous session

===== Server: https://myserver:443

===== Username: Admin

===== Scheduling extracts for datasource 'Data Extracts/Amazons YTD ATP Buys' to

be refreshed now...

===== Finished refresh of extracts (new extract id:{334BA063-F5B2-477D-A81F-

660B0227ECE8}) for Data Source ‘My Sexy Data Source'

We are using the following Tabcmd parameters:

- –no-certcheck – we need this in case of SSL

- –accepteula – this is a new parameter, that was introduced recently

- -u – Tableau username who has permissions to do perform the desired action

- -p – Password

- -s – Tableau Host or Load Balancer endpoint

- –datasource – Tableau Data Source

- –project – Project where Data Source is stored

- –synchronous – this parameter will wait for feedback from Tableau server about the end of the

Tableau Extract refresh. This allows us to execute jobs in the chain.

As a result, we can trigger Tableau from Matillion EC2. We even can copy this logic into the

Matillion Bash component, but it will hard for business users to go through it and self-serve.

Create Matillion Shared Job

In order to simplify the job of end users, we will leverage Matillion Shared Jobs and

Matillion Variables .

The main purpose of Shared Job is to bundle entire workflows into a single custom component.

We will create 2 custom components:

- Refresh Tableau Extract

- Export PDF Dashboard to S3 bucket

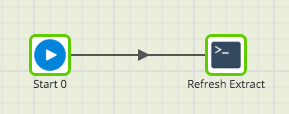

Before we start, we should create new Orchestration Job for each use case and then we can

insert Matillion Variables and create Shared Job.

- Create new Orchestration job with bash component and name it “Refresh Tableau

Extract”:

Bash component has Timeout parameter. By default, it is 1000 seconds. For extract, we

might increase this in order to wait while your biggest extract will refresh.

Then paste the code that we tested already and replace Tableau objects with Matillion

parameters:

#go to tabcmd location

cd /opt/tableau/tabcmd/bin

#login tableau server

./tabcmd login -u Admin -p '${password}' -s ${tableu_host} --no-certcheck --

accepteula

#refresh extract

./tabcmd refreshextracts --datasource "${data_source_name}" --project

"${project_name}" --no-certcheck –-synchronous

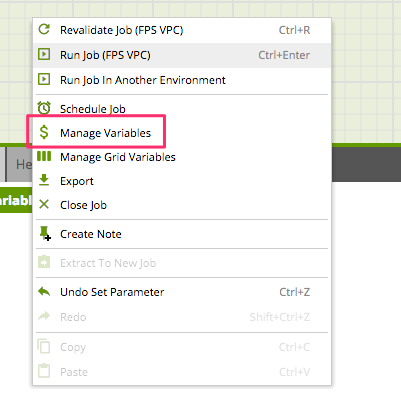

As a result, this component will refresh Tableau Extract based on value for the variable. In

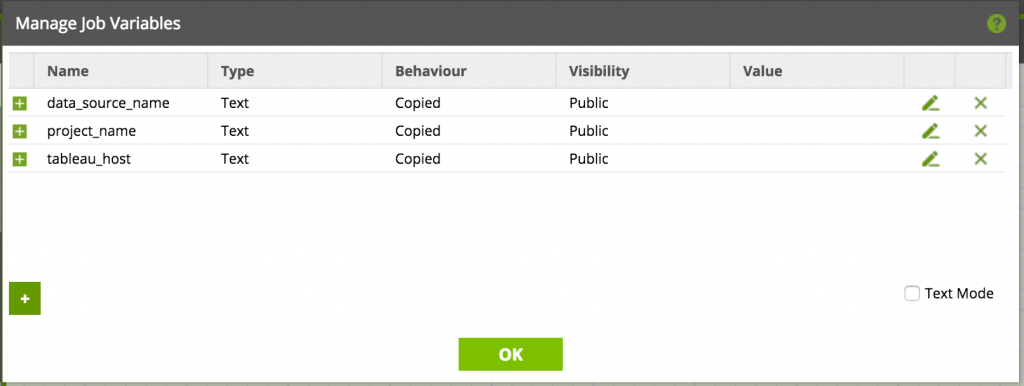

addition, we should create Matillion Variable for our parameter. Click right button on

canvas and choose “Manage Variables”.

Then add 3 new variables. They have to be public:

2. Create one more job or duplicate existing one and name it “Tableau Export PDF”. Enter

the following code with the Matillion Parameters:

#go to tabcmd location

cd /opt/tableau/tabcmd/bin

#login tableau server

./tabcmd login -u Admin -p 'p@ssword' -s ${tableu_host} --no-certcheck --

accepteula

#export pdf from Tabelau Server

./tabcmd export "${tableau_view_name}" --pdf --pagelayout landscape -f "/tmp/$(date

+%Y%m%d)_${tableau_report_name}.pdf" --no-certcheck

#upload pdf to the S3

aws s3 cp /tmp/$(date +%Y%m%d)_${tableau_report_name}.pdf s3://${bucket_name}/$(date +%Y%m%d)/$(date +%Y%m%d)_${tableau_report_name}.pdf

#clean out

rm /tmp/$(date +%Y%m%d)_${tableau_report_name}.pdf

This script will export Tableau View into /tmp location on our EC2 and the then will

upload to the Reporting Bucket via AWS CLI. Moreover, it will automatically create a folder in Bucket with the date. In addition, we specify the file name according to our

naming convention.

Moreover, you should create variables in the same way as in step #1:

- tableau_report_name

- tableau_view_name

- tableau_host

- bucket_name

You might see how this solution is flexible and you can achieve much different use

cases.

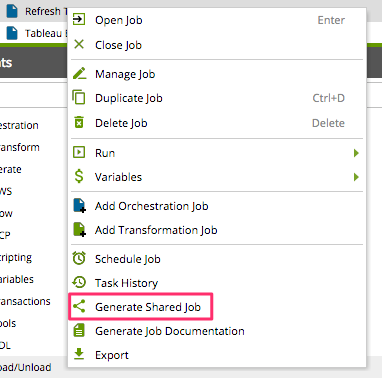

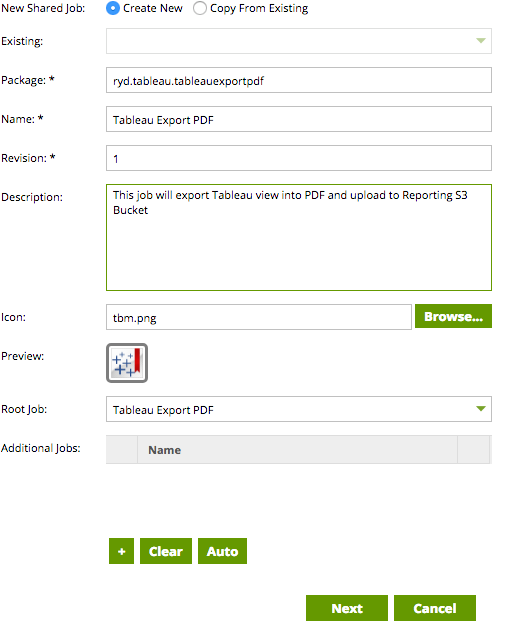

3. Now we can create the Shared Jobs and wrap our Orchestration jobs. Click right button

on job name and choose “Generate Shared Job”:

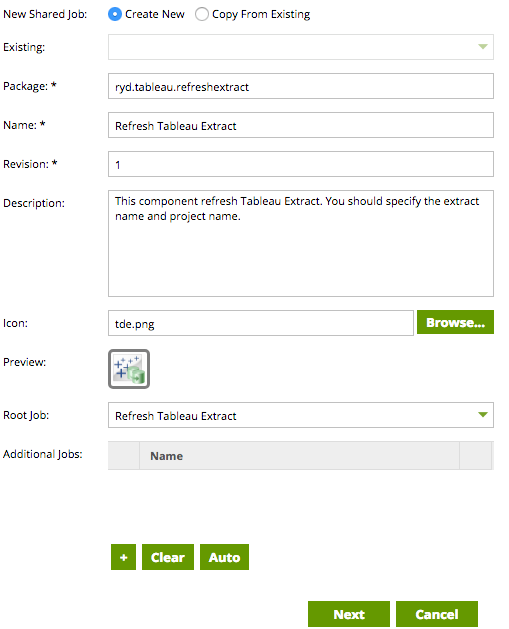

Then we should fill the form and choose the image (our the most favorite step):

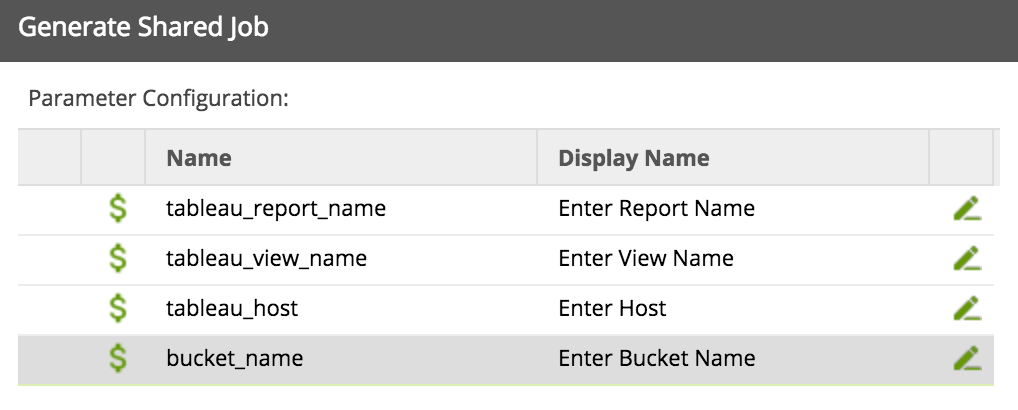

Click Next and you will see Parameter Configuration step:

Then click Ok.

4. Then do the same for the 2nd Job Tableau Export PDF:

Click Next and fill the Parameter Configuration page and click Ok.

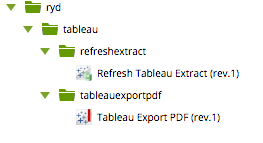

5. Let’s check out jobs. Go to Shared Jobs Pane -User Defined and expand “ryd” one:

As a result, you’ll see our new jobs.

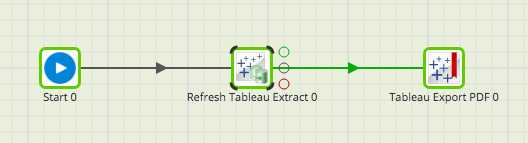

6. Let’s build all together. Create new Orchestration job and drag and drop our new shared

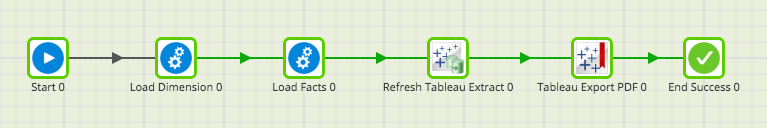

components:

Usually, one extract can source many different workbooks. As a result, we can use

another powerful feature of Matillion – Fixed Iterator

Let’s add fixed iterator on top of the job and it will allow us to specify multiple reports at

once:

As a result, with created new custom components that looks very friendly and familiar

for the end users. Using this approach we can leverage any Tabcmd command and

create a custom component for it.

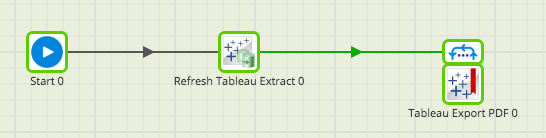

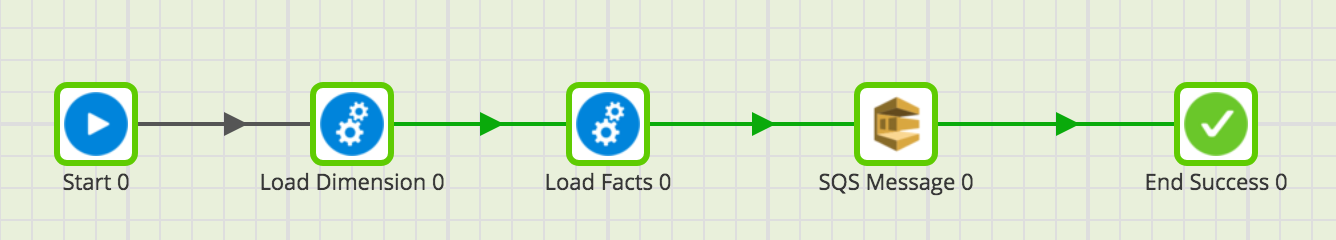

Integrated Matillion and Tableau via SQS

In order to address the initial problem, we should connect our main ELT process with

Tableau Shared job. Having shared jobs it could be done very easily. There are 2 ways of

doing this:

1. Drag and Drop Tableau Shared job into the end of ELT job. For example, when

your fact and dimension tables finish loading, you will trigger Tableau:

2. Use SQS component

In order to trigger a dedicated job in Matillion that will

trigger Tabcmd.

Summary

In this article, we learned about the importance of integration BI and ELT/ETL as well

as learn how to deploy Tabcmd on Amazon EC2 instance with Matillion. Finally,

we used Shared Jobs and created Custom Matillion components in order to allow

end users easily work with Matillion and Tableau.

Appendix

- Matillion Job is a rows jobs based on default components that consist of Bash Components and keep the logic for Shared Jobs.

- Shared Jobs actual shared jobs that you can reuse or modify.

About Matillion:

Matillion is the only software company that offers purpose-built, native

ELT solutions for cloud data platforms; our products provide greater speed for our customers as they

develop the insights that power their organizations.

About Tableau:

From connection through collaboration, Tableau is the most powerful,

secure, and flexible end-to-end analytics platform for your data. Elevate people with the power of

data. Designed for the individual, but scaled for the enterprise, Tableau is the only business

intelligence platform that turns your data into insights that drive action.